Abstract

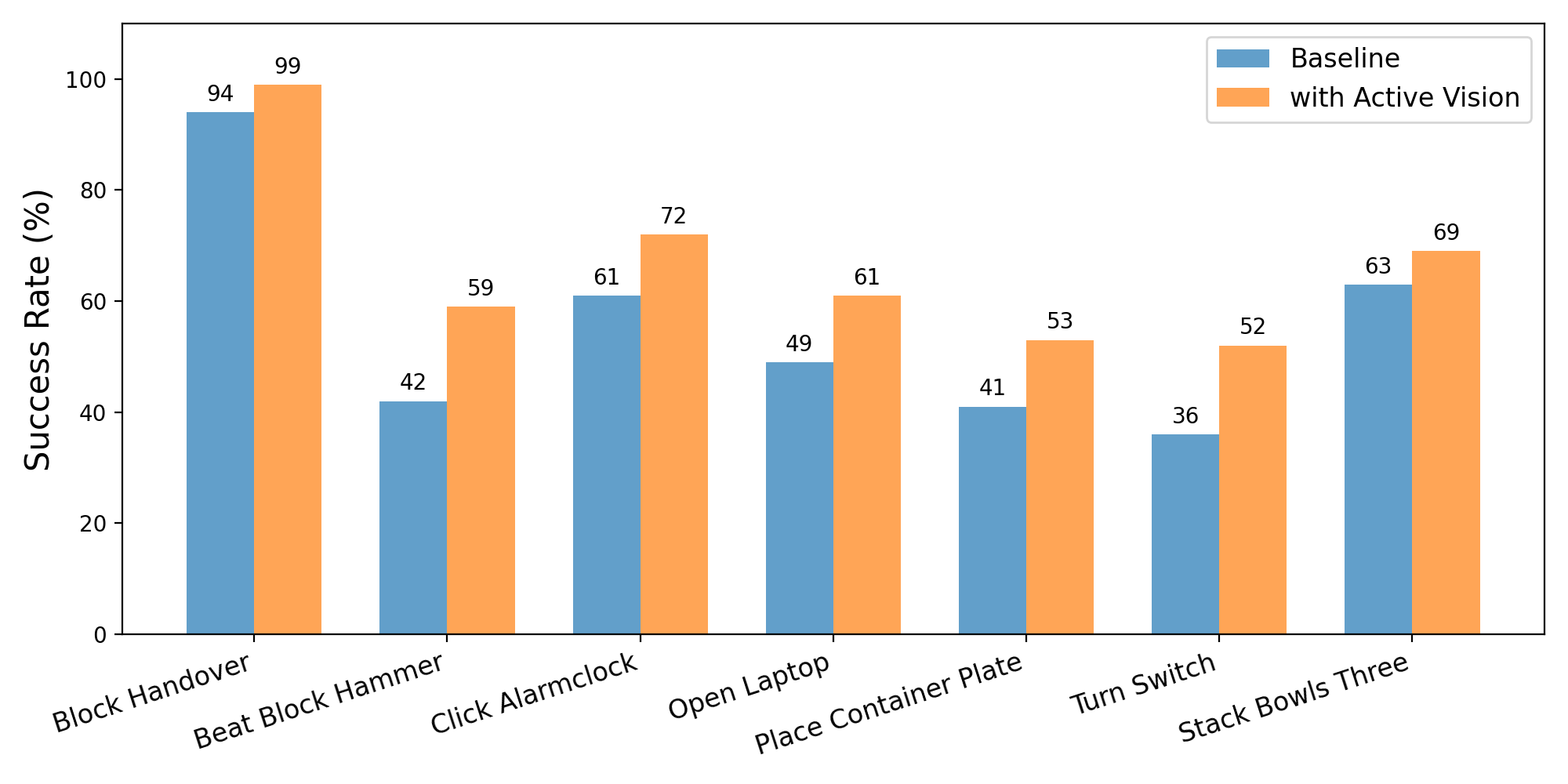

Robotic manipulation in complex scenes demands precise perception of task-relevant details, yet fixed or suboptimal viewpoints often limit resolution and induce occlusions, constraining imitation-learned policies. We present AVR (Active Vision-driven Robotics), a bimanual teleoperation and learning framework that unifies head-tracked viewpoint control (HMD-to-2-DoF gimbal) with motorized optical zoom to keep targets centered at an appropriate scale during data collection and deployment. In simulation, an AVR plugin augments RoboTwin demonstrations by emulating active vision (ROI-conditioned viewpoint change, aspect-ratio-preserving crops with explicit zoom ratios, and super-resolution), yielding 5–17% gains in task success across diverse manipulations. On our real-world platform, AVR improves success on most tasks, with gains of more than 25% over the static-view baseline. Extended studies show enhanced robustness under occlusion, clutter, and lighting disturbances, as well as generalization to unseen environments and objects.

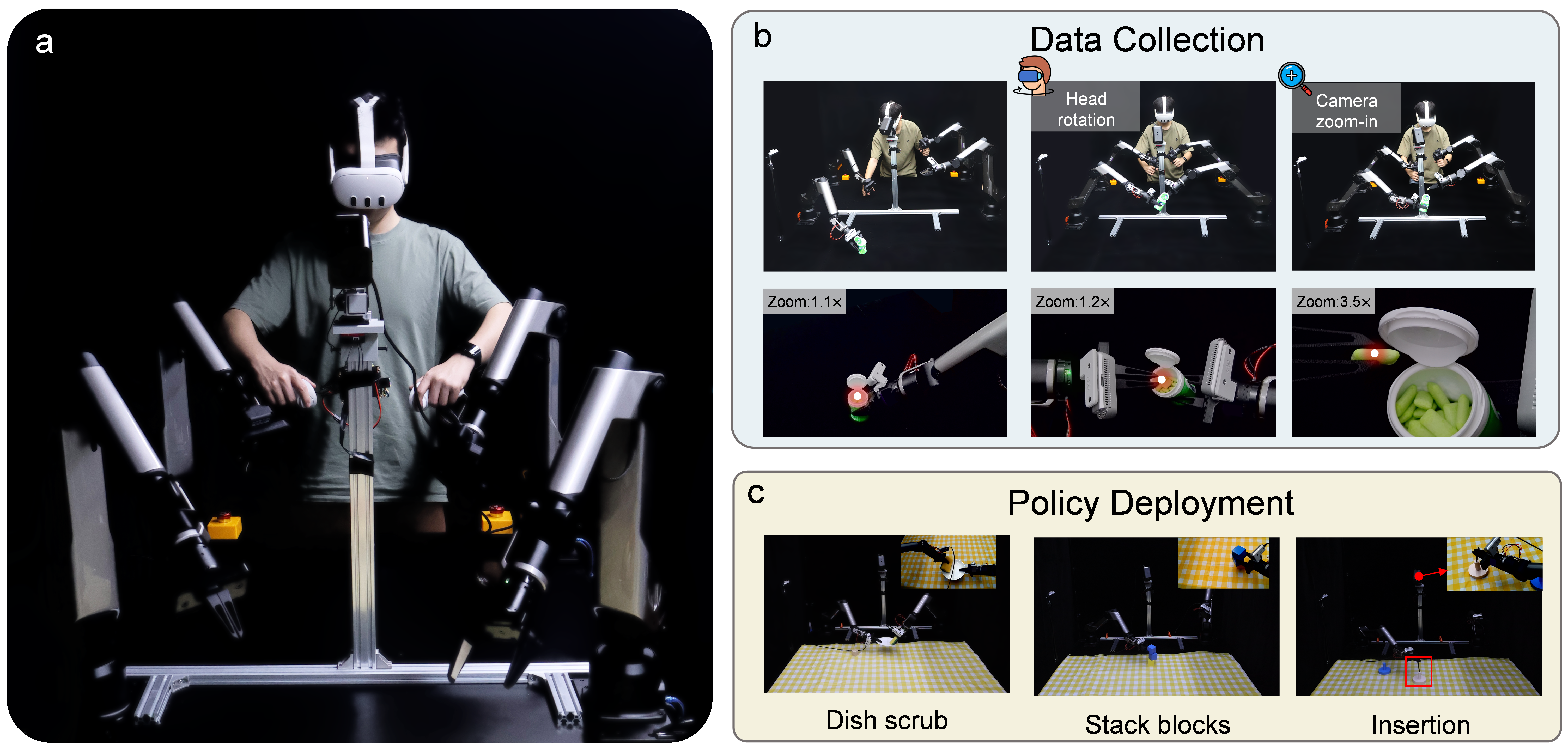

Hardware Platform

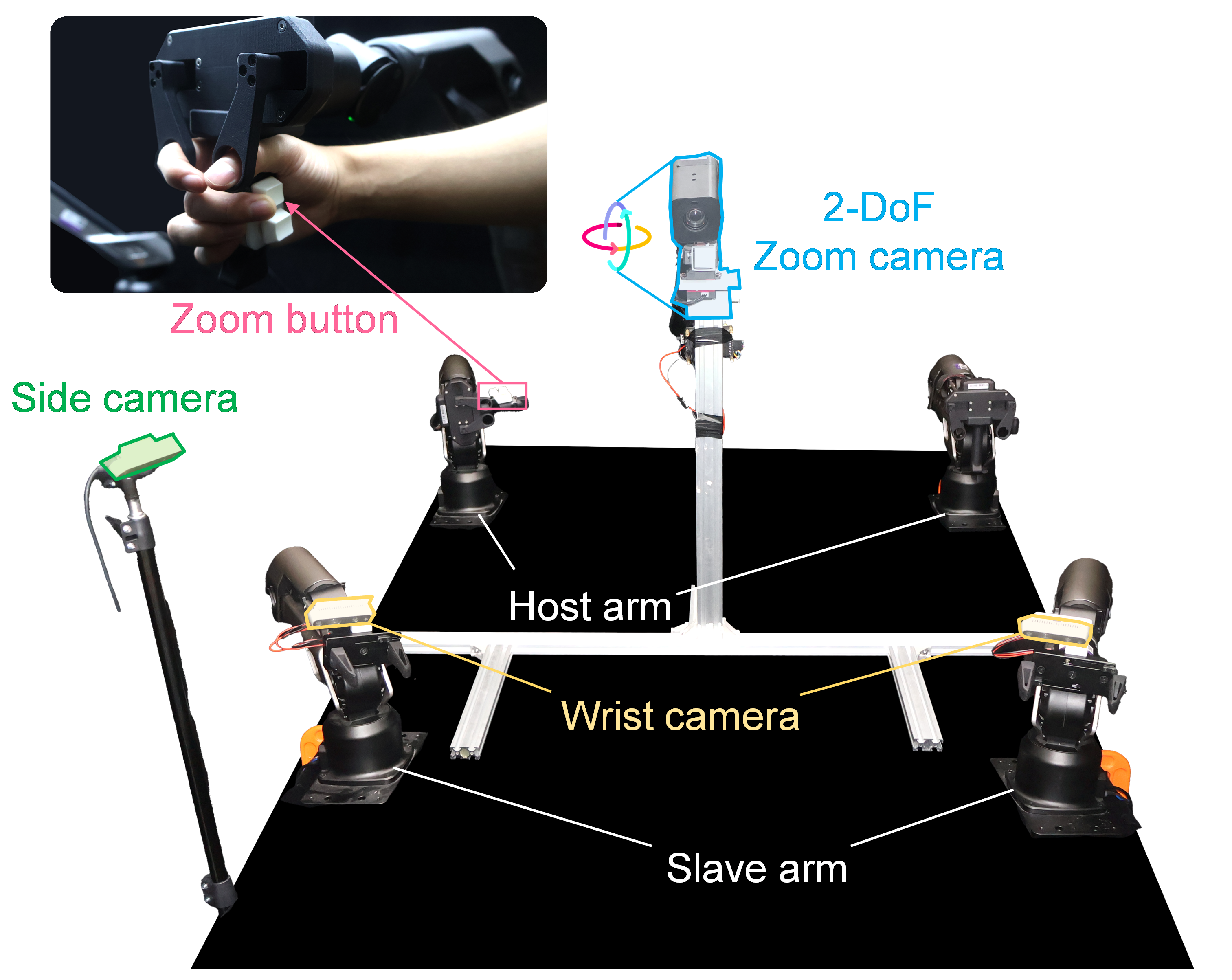

Our platform features two 6-DoF Galaxea robotic arms with parallel-jaw grippers; three Intel RealSense D435i depth cameras (one side-mounted, two wrist-mounted) for full workspace coverage; and a top-mounted active-vision unit, a motorized-zoom camera on a 2-DoF gimbal that follows the operator's head motion to deliver real-time visual feedback.

Data Collection

The top camera supports two-axis (pan/tilt) viewpoint adjustment and dynamic zoom. Operators rotate the VR headset to bring the target to the center of the view and adjust zoom via keyboard or controller.
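To make the head-to-gimbal coupling concrete, here is a minimal Python sketch of how headset orientation and zoom key presses could be turned into gimbal and lens commands. It assumes a one-to-one mapping of headset yaw to pan and pitch to tilt, ignores head roll (a 2-DoF gimbal cannot reproduce it), and adds a small deadband against head tremor. `GimbalLimits`, `headset_to_gimbal`, `step_zoom`, and every numeric limit are hypothetical illustrations, not AVR's actual controller or hardware values.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class GimbalLimits:
    # Hypothetical mechanical range in degrees; AVR's real gimbal limits are not given.
    pan_min: float = -90.0
    pan_max: float = 90.0
    tilt_min: float = -45.0
    tilt_max: float = 45.0


def clamp(x: float, lo: float, hi: float) -> float:
    """Limit x to the closed interval [lo, hi]."""
    return max(lo, min(hi, x))


def headset_to_gimbal(
    yaw_deg: float,
    pitch_deg: float,
    prev: Tuple[float, float] = (0.0, 0.0),
    limits: GimbalLimits = GimbalLimits(),
    deadband_deg: float = 1.0,
) -> Tuple[float, float]:
    """Map headset yaw/pitch (degrees) to a (pan, tilt) command for a 2-DoF gimbal.

    Assumption: yaw drives pan and pitch drives tilt one-to-one; roll is ignored.
    Changes smaller than the deadband keep the previous command to suppress jitter.
    """
    pan = clamp(yaw_deg, limits.pan_min, limits.pan_max)
    tilt = clamp(pitch_deg, limits.tilt_min, limits.tilt_max)
    prev_pan, prev_tilt = prev
    if abs(pan - prev_pan) < deadband_deg:
        pan = prev_pan
    if abs(tilt - prev_tilt) < deadband_deg:
        tilt = prev_tilt
    return pan, tilt


def step_zoom(zoom: float, direction: int, step: float = 0.5,
              zoom_min: float = 1.0, zoom_max: float = 10.0) -> float:
    """Update the zoom ratio from a key/button press: +1 zooms in, -1 out, 0 holds."""
    return clamp(zoom + direction * step, zoom_min, zoom_max)
```

In a teleoperation loop, one would call `headset_to_gimbal` every frame with the previously sent command as `prev`, and `step_zoom` whenever a zoom key or controller button is pressed. Clamping before the deadband check means that pushing the head past the gimbal's range simply holds the camera at its limit.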

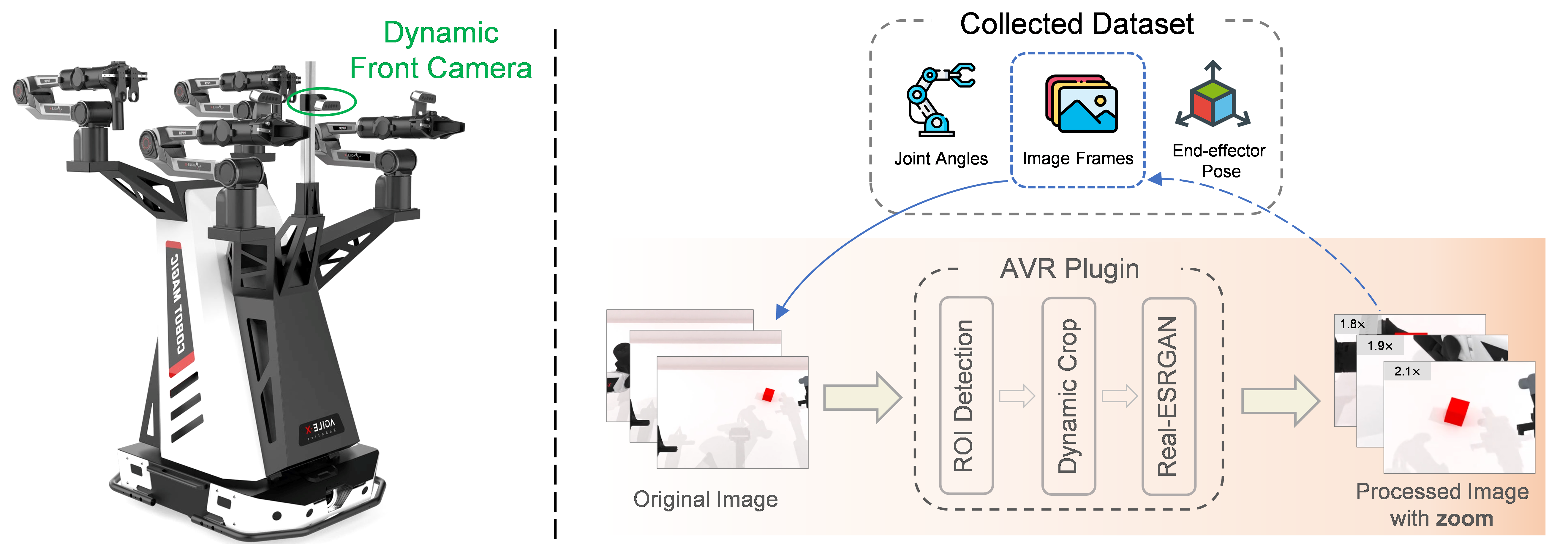

Simulation Evaluation

We build a RoboTwin-based simulation environment to evaluate AVR across a variety of manipulation tasks. Taking the front-camera view from the collected RoboTwin dataset as input, we obtain a detailed observation through ROI detection, an aspect-ratio-preserving crop with an explicit zoom ratio, and Real-ESRGAN super-resolution. The resulting zoomed images are appended to the dataset as an additional detailed observation for the policy.
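The crop-with-zoom step can be sketched as follows. This is a minimal illustration under stated assumptions, not the AVR plugin's code: the ROI is given as a pixel box from some detector, the crop keeps the frame's aspect ratio (up to pixel rounding) at `1/zoom` of its size, and windows near the border are shifted rather than shrunk so the nominal zoom ratio stays exact. The helper names and the `margin` and `zoom_cap` values are hypothetical; ROI detection and the Real-ESRGAN upscaling of the crop back to full resolution are not shown.

```python
from typing import List, Tuple

Box = Tuple[int, int, int, int]  # (x0, y0, x1, y1) in pixels; x1 and y1 are exclusive


def zoom_crop_box(img_w: int, img_h: int, roi: Box, zoom: float) -> Box:
    """Crop window centred on the ROI, 1/zoom of the frame size, same aspect ratio."""
    if zoom < 1.0:
        raise ValueError("zoom ratio must be >= 1")
    crop_w = round(img_w / zoom)
    crop_h = round(img_h / zoom)
    cx = (roi[0] + roi[2]) / 2
    cy = (roi[1] + roi[3]) / 2
    x0 = round(cx - crop_w / 2)
    y0 = round(cy - crop_h / 2)
    # Shift the window back inside the frame instead of shrinking it,
    # so the aspect ratio and the stated zoom ratio are preserved.
    x0 = min(max(x0, 0), img_w - crop_w)
    y0 = min(max(y0, 0), img_h - crop_h)
    return x0, y0, x0 + crop_w, y0 + crop_h


def max_zoom_for_roi(img_w: int, img_h: int, roi: Box,
                     margin: float = 1.2, zoom_cap: float = 4.0) -> float:
    """Largest zoom (between 1 and zoom_cap) that keeps the ROI plus a margin in view."""
    roi_w = (roi[2] - roi[0]) * margin
    roi_h = (roi[3] - roi[1]) * margin
    if roi_w <= 0 or roi_h <= 0:
        raise ValueError("ROI must have positive width and height")
    zoom = min(img_w / roi_w, img_h / roi_h)
    return max(1.0, min(zoom, zoom_cap))


def crop(image: List[list], box: Box) -> List[list]:
    """Crop a row-major image stored as a list of rows (NumPy: image[y0:y1, x0:x1])."""
    x0, y0, x1, y1 = box
    return [row[x0:x1] for row in image[y0:y1]]
```

For example, on a 640x480 frame with an ROI centred at (320, 240), `zoom_crop_box(640, 480, roi, 2.0)` yields the 320x240 window `(160, 120, 480, 360)`. Recording the chosen `zoom` alongside each appended image is one way to give the policy the explicit zoom ratio mentioned above.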

Our approach improves success rates by 5% to 17% across all simulated tasks, indicating that the added detailed observations benefit a diverse range of manipulation scenarios.

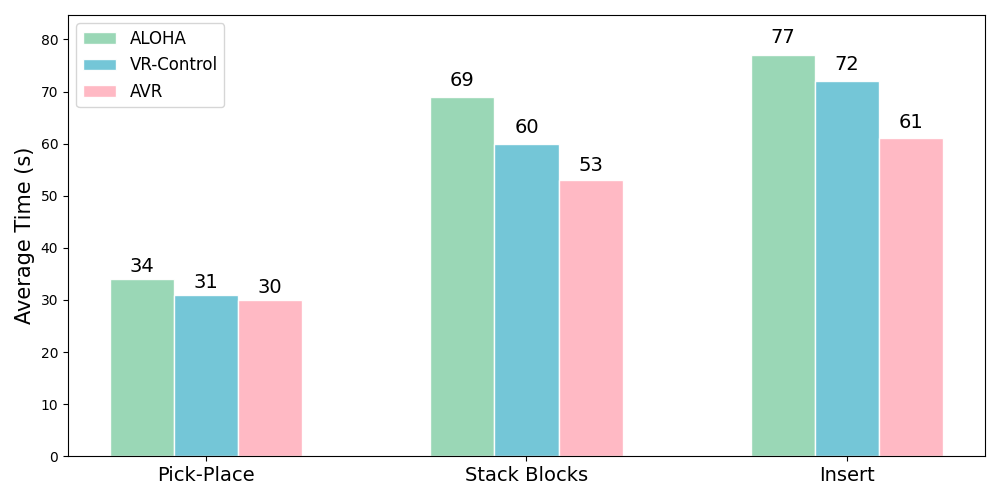

Real-world Experiments

We further design a series of real-world experiments to evaluate AVR on a range of manipulation tasks.

We implemented tasks across various scenarios, including pick-and-place handover, clothes folding, plate scrubbing, stacking three blocks, and inserting a screwdriver (0.5 cm diameter) into a small hole (0.75 cm diameter).
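The two diameters show why the insertion task is a precision benchmark. Assuming the screwdriver is held coaxially with the hole and ignoring any tilt, the clearance works out as:

```latex
c_{\text{diam}} = d_{\text{hole}} - d_{\text{tool}}
  = 0.75\,\text{cm} - 0.5\,\text{cm} = 0.25\,\text{cm},
\qquad
c_{\text{radial}} = \tfrac{1}{2}\,c_{\text{diam}} = 0.125\,\text{cm} = 1.25\,\text{mm}.
```

So the tool tip must land within roughly 1.25 mm of the hole's axis; any tilt of the screwdriver reduces this margin further.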

Below are some of our deployment results, including an uncut, real-time (1x) video of the screwdriver insertion task.

Screwdriver Insert (1x)

Pick-place Handover

Fold Clothes

Plate Scrub

Stack Blocks

Results

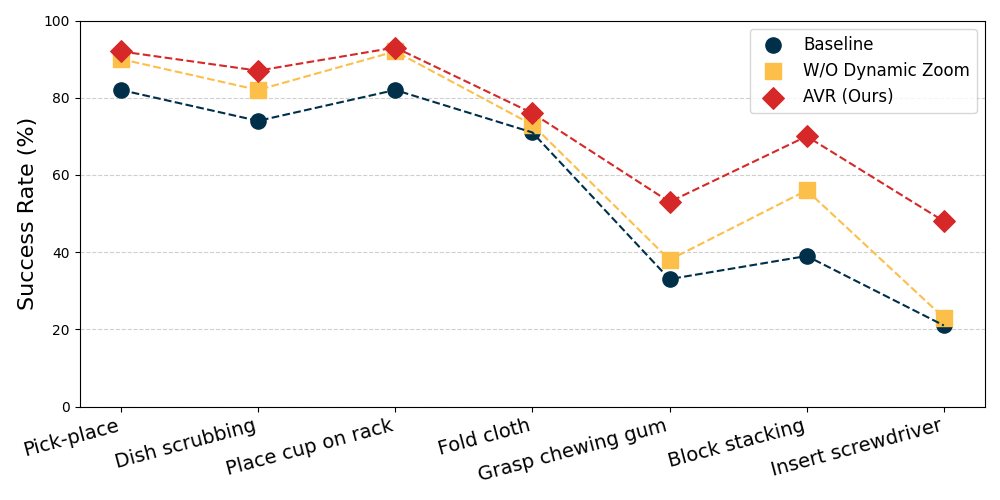

Extended Studies

We further evaluate our method through quantitative experiments, including an ablation study, robustness assessments, and generalization tests. Below are some result videos from the real-robot experiments.

Third-Person View

First-Person View

Failures

We also show some failure cases from our real-robot experiments. 😅

Conclusion and Future Work

We present the AVR framework, which leverages dynamic viewpoint and focal length adjustments in active vision to enhance precise manipulation.

It provides intuitive teleoperation and a reliable data-collection workflow, enabling consistent viewpoint and zoom control for stable and accurate operation.

Experiments show success-rate improvements of 5–17% in simulation and gains of over 25% on real-world precision tasks, clearly surpassing conventional static-view imitation learning.

Extended tests further confirm enhanced robustness under occlusion, clutter, and lighting changes, as well as stronger generalization to unseen environments and objects.

Looking ahead, we plan to improve data-collection efficiency with AR glasses and data gloves that capture human perception without teleoperation, enhance viewpoint control through better gimbal mechanisms and richer sensing (e.g., wrist cameras), and develop instruction-conditioned policies to guide active perception.

BibTeX

@misc{liu2025avractivevisiondrivenrobotic,
  title={AVR: Active Vision-Driven Robotic Precision Manipulation with Viewpoint and Focal Length Optimization},
  author={Yushan Liu and Shilong Mu and Xintao Chao and Zizhen Li and Yao Mu and Tianxing Chen and Shoujie Li and Chuqiao Lyu and Xiao-ping Zhang and Wenbo Ding},
  year={2025},
  eprint={2503.01439},
  archivePrefix={arXiv},
  primaryClass={cs.RO},
  url={https://arxiv.org/abs/2503.01439},
}